Highlights 🎉

Jan v0.6.7 adds full support for OpenAI’s open-weight models, gpt-oss-120b and gpt-oss-20b, along with expanded MCP documentation and critical bug fixes for reasoning models.

🚀 OpenAI gpt-oss Models Now Supported

Jan now fully supports OpenAI’s first open-weight language models since GPT-2:

gpt-oss-120b:

- 117B total parameters, 5.1B active per token

- Runs efficiently on a single 80GB GPU

- Near-parity with OpenAI o4-mini on reasoning benchmarks

- Exceptional tool use and function calling capabilities

gpt-oss-20b:

- 21B total parameters, 3.6B active per token

- Runs on edge devices with just 16GB of memory

- Similar performance to OpenAI o3-mini

- Perfect for local inference and rapid iteration

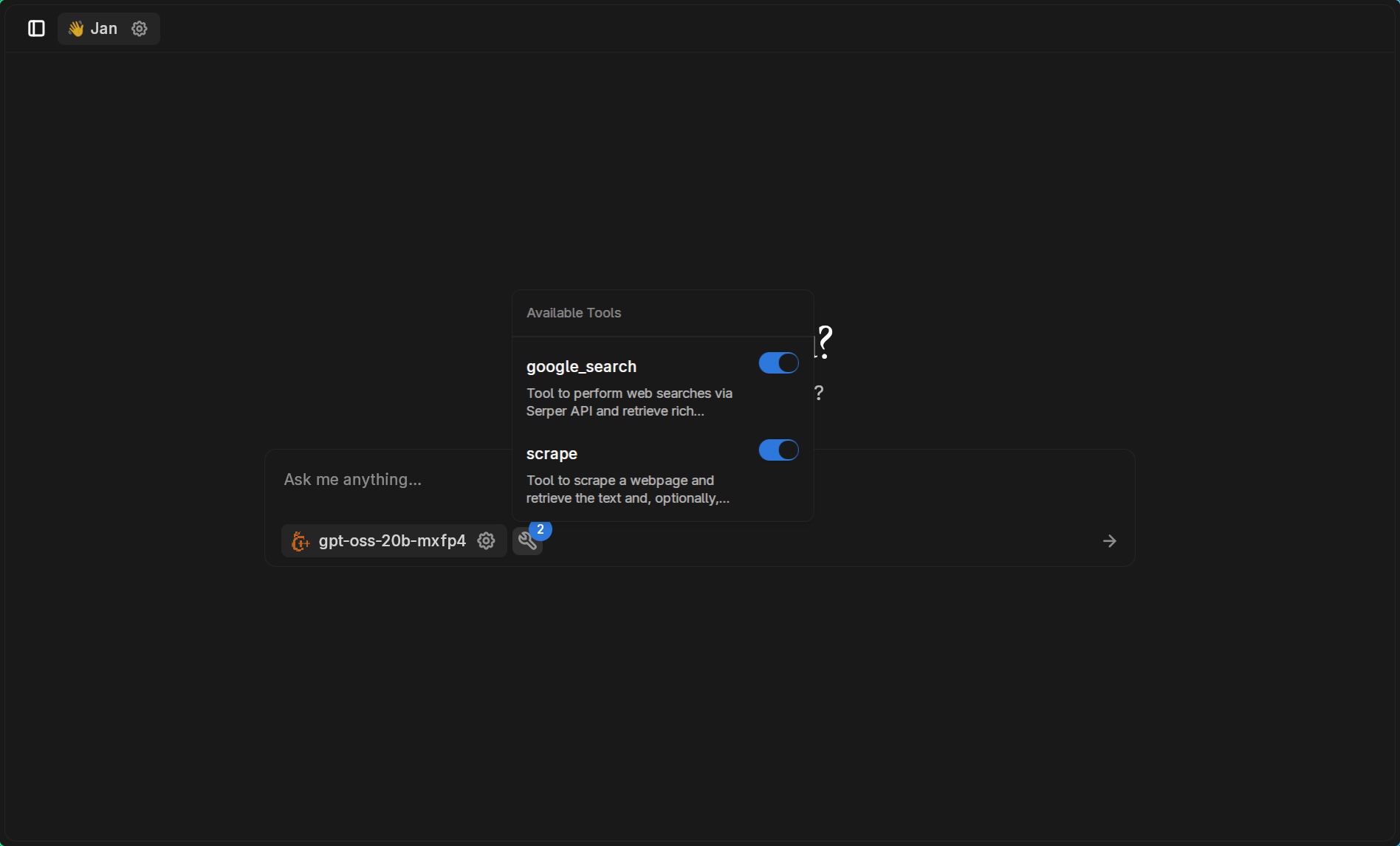

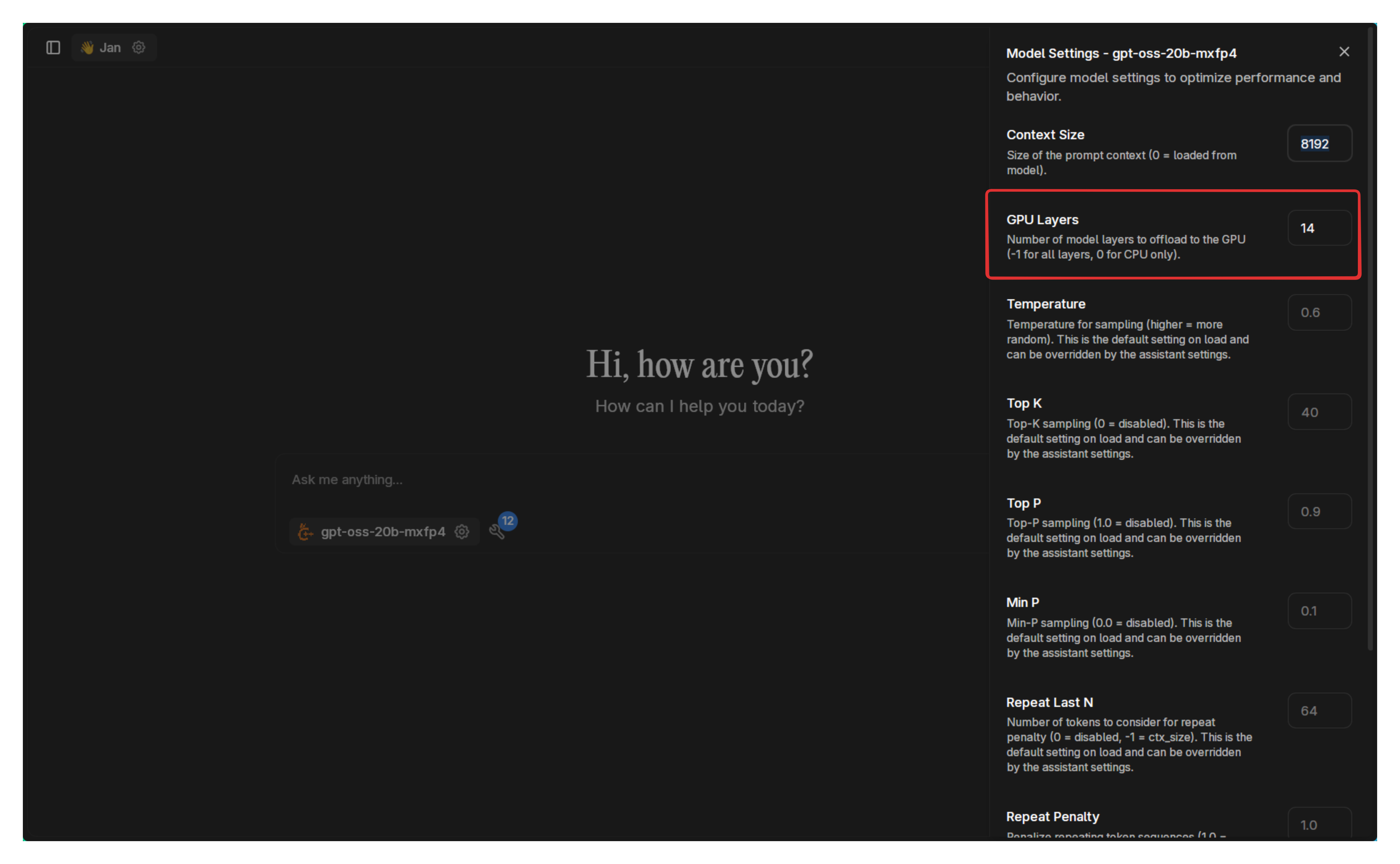

🎮 GPU Layer Configuration

Because these models are large, you may need to adjust the number of GPU layers to fit your hardware.

Start with the default settings and reduce the layer count if you encounter out-of-memory errors. The right value depends on your available VRAM, so it differs from system to system.
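
As a rough starting point before trial and error, you can estimate how many layers might fit in VRAM. The sketch below is only an approximation: it assumes every layer is the same size, and the function name, the 2 GB reserve, and the example figures (a 12 GB model file with 24 layers) are illustrative assumptions, not values taken from Jan.

```python
def layers_that_fit(vram_gb: float, model_gb: float, n_layers: int,
                    reserve_gb: float = 2.0) -> int:
    """Estimate how many model layers can be offloaded to the GPU.

    Assumes all layers are equally sized and keeps `reserve_gb` of VRAM
    free for the KV cache and runtime overhead (an illustrative guess).
    """
    if n_layers <= 0 or model_gb <= 0:
        raise ValueError("n_layers and model_gb must be positive")
    per_layer_gb = model_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    # Never report more layers than the model actually has.
    return min(n_layers, int(usable_gb // per_layer_gb))


# Hypothetical 12 GB model with 24 layers on GPUs of different sizes.
print(layers_that_fit(vram_gb=8, model_gb=12, n_layers=24))   # 12
print(layers_that_fit(vram_gb=24, model_gb=12, n_layers=24))  # 24
```

If the estimated value still triggers out-of-memory errors, lower it a few layers at a time until the model loads.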

📚 New Jupyter MCP Tutorial

We’ve added comprehensive documentation for the Jupyter MCP integration:

- Real-time notebook interaction and code execution

- Step-by-step setup with Python environment management

- Example workflows for data analysis and visualization

- Security best practices for code execution

- Performance optimization tips

The tutorial demonstrates how to turn Jan into a capable data science partner that can execute analysis, create visualizations, and iterate based on actual results.

🔧 Bug Fixes

Critical fixes for reasoning model support:

- Fixed reasoning text inclusion: Reasoning text is no longer incorrectly included in chat completion requests

- Fixed thinking block display: gpt-oss thinking blocks now render properly in the UI

- Fixed React state loop: Resolved infinite re-render issue with useMediaQuery hook
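
To illustrate the first fix in the list above, here is a minimal sketch of removing reasoning text from earlier assistant turns before the conversation is sent back in a chat completion request. It assumes reasoning is wrapped in `<think>...</think>` tags, which is an assumption made for this example. It is not Jan's actual implementation, and `strip_reasoning` is a hypothetical helper.

```python
import re

# Assumed reasoning format for this sketch: <think>...</think> blocks.
THINK_BLOCK = re.compile(r"<think>.*?</think>\s*", re.DOTALL)


def strip_reasoning(messages: list[dict]) -> list[dict]:
    """Return a copy of `messages` with reasoning removed from assistant turns."""
    cleaned = []
    for msg in messages:
        content = msg.get("content")
        if msg.get("role") == "assistant" and isinstance(content, str):
            # Build a new dict so the original history is left untouched.
            msg = {**msg, "content": THINK_BLOCK.sub("", content).strip()}
        cleaned.append(msg)
    return cleaned


history = [
    {"role": "user", "content": "What is 2 + 2?"},
    {"role": "assistant", "content": "<think>A simple sum.</think>\n4"},
]
print(strip_reasoning(history)[1]["content"])  # 4
```

Only the final answer is kept in the outgoing history, so the model does not receive its own earlier reasoning as part of the prompt.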

Using gpt-oss Models

Download from Hub

All gpt-oss GGUF variants are available in the Jan Hub. Simply search for “gpt-oss” and choose the quantization that fits your hardware.

Model Capabilities

Both models excel at:

- Reasoning tasks: Competition coding, mathematics, and problem solving

- Tool use: Web search, code execution, and function calling

- CoT reasoning: Full chain-of-thought visibility for monitoring

- Structured outputs: JSON schema enforcement and grammar constraints
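
As an illustration of structured outputs, the sketch below builds an OpenAI-style chat completion request body that asks for JSON matching a schema. It only constructs the payload and sends nothing. The model identifier `gpt-oss-20b`, the helper name, and the example schema are assumptions for this example, and whether a given local server honors the `json_schema` response format should be checked against its own documentation.

```python
import json


def build_structured_request(prompt: str, schema: dict,
                             model: str = "gpt-oss-20b") -> dict:
    """Build an OpenAI-style request body asking for schema-conforming JSON.

    The default model name is a placeholder; use the ID your server reports.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "answer", "schema": schema, "strict": True},
        },
    }


# Hypothetical schema: a city name and its population.
city_schema = {
    "type": "object",
    "properties": {
        "city": {"type": "string"},
        "population": {"type": "integer"},
    },
    "required": ["city", "population"],
    "additionalProperties": False,
}

body = build_structured_request("Name the largest city in Japan.", city_schema)
print(json.dumps(body, indent=2))
```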

Performance Tips

- Memory requirements: gpt-oss-120b needs ~80GB, gpt-oss-20b needs ~16GB

- GPU layers: Adjust based on your VRAM (start high, reduce if needed)

- Context size: Both models support up to 128k tokens

- Quantization: Choose a lower-bit quantization for a smaller memory footprint, at some cost in output quality
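
To see why quantization matters so much for memory, a back-of-the-envelope estimate is parameters times bits per weight, divided by 8 to get bytes. The sketch below applies that formula to the parameter counts quoted above. It is a simplification: it ignores the KV cache, activations, and tensors stored at mixed precision, so real GGUF files and runtime usage will differ, and the bit widths shown are illustrative.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB: parameters x bits per weight / 8.

    Uses 1 GB = 1e9 bytes and covers the weights only.
    """
    return params_billion * bits_per_weight / 8


print(f"{weight_memory_gb(21, 16):.1f} GB")   # 42.0 GB  (21B at 16-bit)
print(f"{weight_memory_gb(21, 4):.1f} GB")    # 10.5 GB  (21B at 4-bit)
print(f"{weight_memory_gb(117, 4):.1f} GB")   # 58.5 GB  (117B at 4-bit)
```

Leave headroom beyond these figures, since the context window and runtime buffers also consume memory.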

Coming Next

We’re continuing to optimize performance for large models, expand MCP integrations, and improve the overall experience for running cutting-edge open models locally.

Update Jan or download the latest version.

For the complete list of changes, see the GitHub release notes.