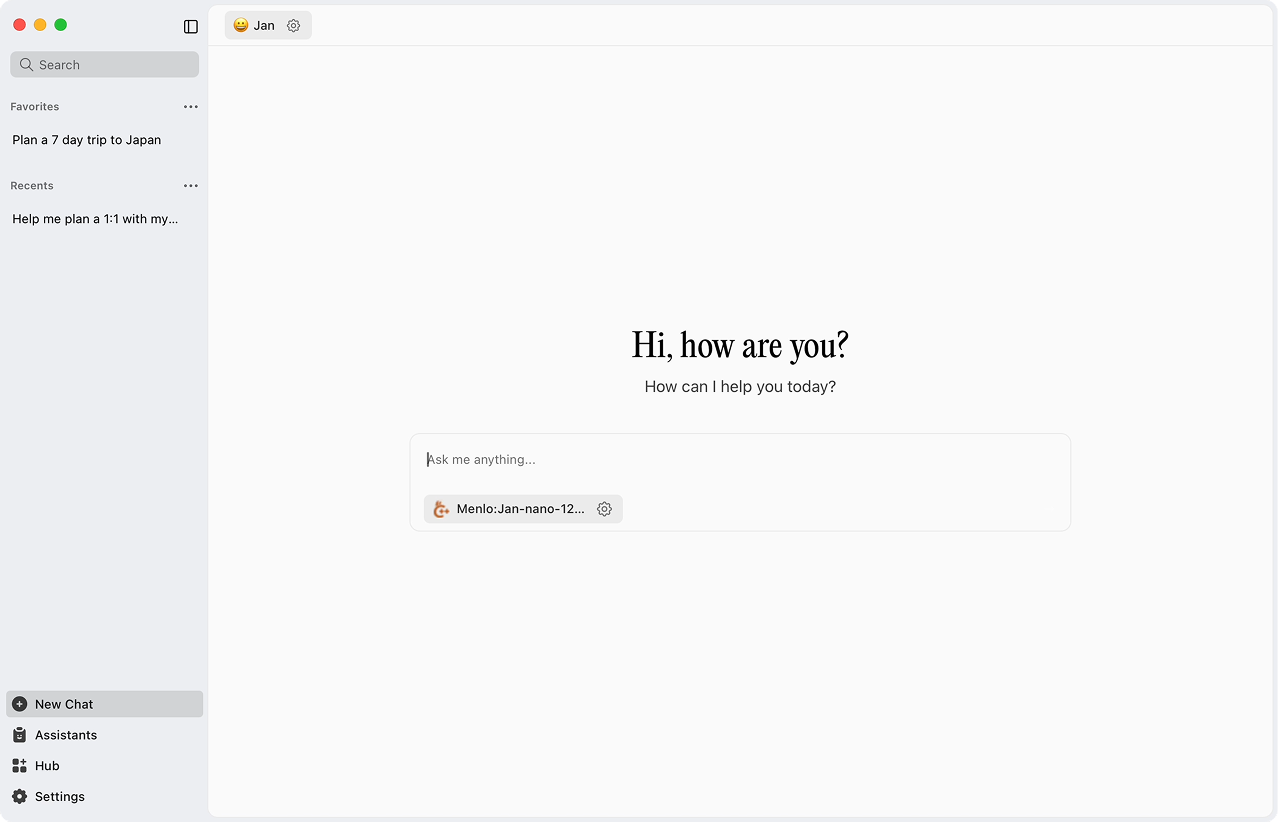

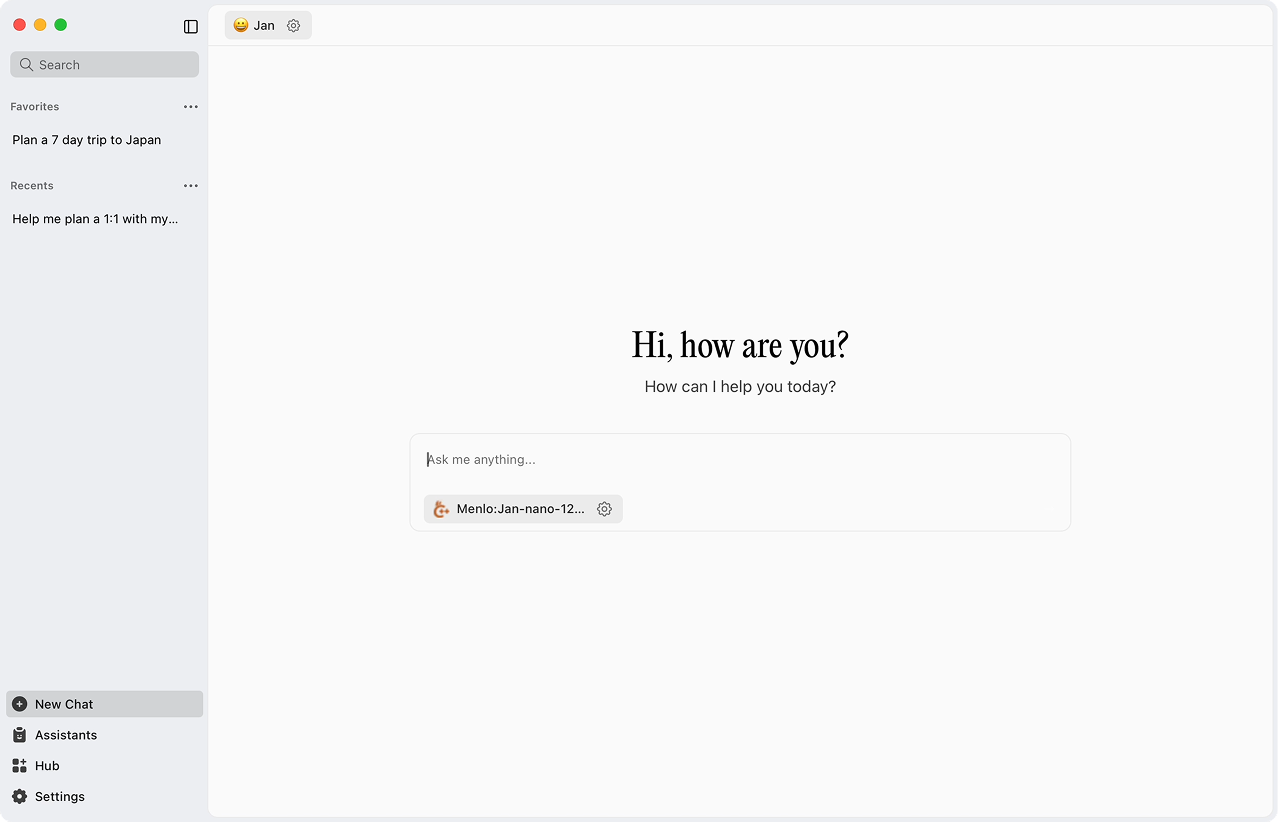

Ask Jan

The best of open-source AI in an easy-to-use product.

Over 4 million downloads

If you are not using Jan with Jan-V1-4B, drop anything you are doing and install it now! It's a little Open gem.

BTW: yes, I'm an AC Milan fan 😢

DeepJan in action: Conducting DeepResearch at home. Local models are taking the lead.

Jan-V1 is seriously good; Apple should consider using this to power Siri. You just don’t need expensive closed models anymore for many tasks; open models have come a long way.

Jan is an amazing tool that is open source and you can deploy locally.

If you haven’t tried it already be sure to do so.

Thanks go out to the @jandotai team!

🚨 Imagine Perplexity Pro — but open-source, private, and running fully on your machine.

✅ No API bills

✅ Real-time web search

✅ Your data stays with you

Meet jan.ai . 🔒✨

#JanAI #OpenSource #Privacy

Running R1 locally on my laptop using @jandotai, I asked it to imagine Nietzsche talking with Dostoyevsky and Spengler about the future of European civilization. They decide to express the core of their argument in haiku format.

Jan did a web search first, then deployed the

I really really like @jandotai

It's a very friendly app to locally run LLMs, great for privacy

I've tried others like LM Studio and Ollama and they're nice but very engineer-built, a bit too difficult for me

Jan is simple and cute and pretty and a great alternative to talk to

Jan (open source) has the potential to become a much better interface to models than anything model providers can build.

Just tried @jandotai today with @nebiusaistudio models

It's impressive, tested with @Zai_org and @Alibaba_Qwen models

Fully open source and lightweight, Web search and MCP options are also available.

well my (Astrodevil) assistant mode has some opinion on Elon vs Sama fight as

Great to see @jandotai doing their thing. They were quietly building all this time. Some of us caught on to them early on. One of the cleanest interfaces and local implementations of AI. Great Job! 👏

MLX q4 - llamacpp q4_k_m head to head with Jan-V1-4B using Jan client. Default settings.

🥇 MLX: 90 toks/s (88-96 min-max)

🥈 llama cpp + flash attn + kv q8_0: 67 toks/s (64-69 min-max)

🥉 llamacpp: 49 toks/s (47-51 min-max)

Here's the video: GGUF followed by MLX

With your Hugging Face PRO account you can now use trending models directly in one of the most beautiful native chat apps ever made (Jan).

1⃣ Go to Settings -> Providers -> Hugging Face

2⃣ Click on "+" then copy/paste a model id

3⃣ Start chatting 🚀

Best of open-source AI in one app

1

Models

Choose from open models or plug in your fav online model.

2

Connectors

Connect your email, files, notes and calendar. Jan works where you work.

3

Memory (coming soon)

Your context carries over, so you don’t repeat yourself. Jan remembers your context and preferences.

Joe

Designer, Singapore

Things to remember

- Prefers clean, minimal layouts

- Working on a portfolio redesign

- Likes concise explanations

- Often asks about Figma and prototyping tools

- Shares work in dark mode

- Interested in typography trends

Ask Jan, get things done